(Just move my unpublished paper from Notion to here)

Author: Tao Feng

Revision history:

| Revision Number | Date | Description |

|---|---|---|

| draft 1 | 8/5/2024 | Publish article |

| draft 1.1 | 8/6/2024 | Add abstract |

| draft 1.2 | 8/7/2024 | Update abstract |

| draft 1.3 | 8/8/2024 | Add an interactive 3D graph to better demonstrates the non-linear dynamic characteristics in LLMs to 4.1.2 |

| draft 1.4 | 8/11/2024 | Add 1 example and 1 figure to 4.2.2 |

| draft 2 | 8/15/2024 | 4.3.1 has been completely rewritten |

| draft 2.1 | 8/23/2024 | Remove some redundant content in section 4.3.3 |

| draft 3 | 8/25/2024 | Remove section 4.4.3 “The necessity of improving LLM adaptability and its significance for achieving true artificial intelligence “ as it is not closely related to the main topic |

| draft3.1 | 8/26/2024 | Add some reference papers to section 4.5.1 |

| draft3.2 | 9/5/2024 | Revise some content in section 4.5.2 that lacks rigor in its descriptions |

💡 Abstract:

In the rapidly advancing wave of artificial intelligence (AI), Large Language Models (LLMs) have emerged as a shining star, not only excelling in natural language processing tasks but also demonstrating remarkable potential in various cross-domain applications. From the early BERT and GPT to today’s GPT-4o, Llama3, Gemini 1.5, and Claude3.5, LLMs have continuously broken through in scale and capability. Their increasingly powerful language understanding and generation abilities indicate that artificial intelligence is progressing towards more advanced cognitive capabilities.

However, with the explosive growth in scale and complexity of LLMs, we face unprecedented challenges in understanding and controlling their behavior. The traditional perspective that views LLMs as mere stacks of algorithms and code is a simplified understanding that struggles to explain their emergent complex behaviors and characteristics. Thus, a new perspective has arisen: viewing LLMs as complex systems.

Complex systems theory studies systems composed of a large number of interacting components, which typically exhibit nonlinear behavior, self-organizing capabilities, and emergent properties. From ecosystems to socio-economic systems, complex systems are ubiquitous, and LLMs possess many characteristics of complex systems, such as self-organization, emergent behavior, feedback loops, and more.

By drawing on concepts from complex systems theory, such as nonlinearity, emergence, self-organization, adaptability, feedback loops, and multi-scale properties, we can construct a novel framework for understanding the behavior and characteristics of LLMs. This interdisciplinary perspective not only helps explain the puzzling behaviors of these models, such as unpredictability and sensitivity to initial conditions, but also provides guidance for developing more advanced and reliable AI systems. For example, by studying emergent properties to design new evaluation metrics, or utilizing self-organization principles to develop new training methods.

This article aims to delve deep into the characteristics of LLMs as complex systems and analyze the potential impact of this new perspective on our understanding and improvement of LLMs. We will start from the complex system features of LLMs, explore their impact on model performance, interpretability, and ethical issues, and ultimately look ahead to future research directions. We believe that this interdisciplinary perspective will provide AI researchers and practitioners with a new framework for thinking, promote a deeper understanding of LLMs, and ultimately drive AI systems towards more intelligent and reliable development.

Outline:

- Introduction

- Definition and importance of Large Language Models (LLMs)

- Basic concepts of complex systems

- A new perspective of viewing LLMs as complex systems

- Overview of the main points and structure of the article

- Basic architecture of large language models

- Neural network structure

- Training data and methods

- Parameter scale and computational complexity

- Analogies of LLMs as complex systems

- Overview of the similarities between LLMs and biological systems

- Organic growth, self-organization, and emergent properties of LLMs

- Complex system characteristics of large language models 4.1 Nonlinearity

- Nonlinear relationships between inputs and outputs

- Nonlinearity in model structures and mechanisms

- Unpredictability of model behavior

- Impacts and challenges of nonlinearity

- Emergence of language understanding capabilities

- Emergence of reasoning and creativity

- Other emergent properties exhibited by LLMs

- Self-adjustment during the training process

- Self-organization of knowledge representation

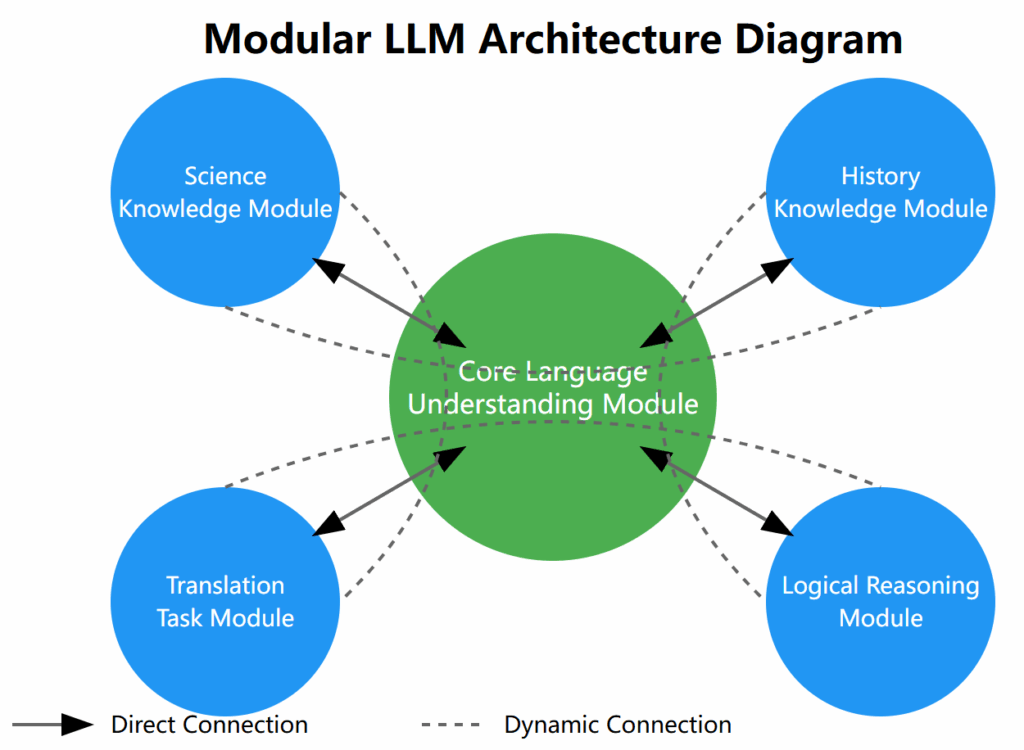

- Modular self-organization

- Concept of modularity in complex biological systems

- Possible modular architectures in LLMs

- Interactions of specialized subsystems and their impact on model capabilities

- Transfer learning capabilities

- Adaptation to new tasks and domains

- Influence of model output on subsequent input

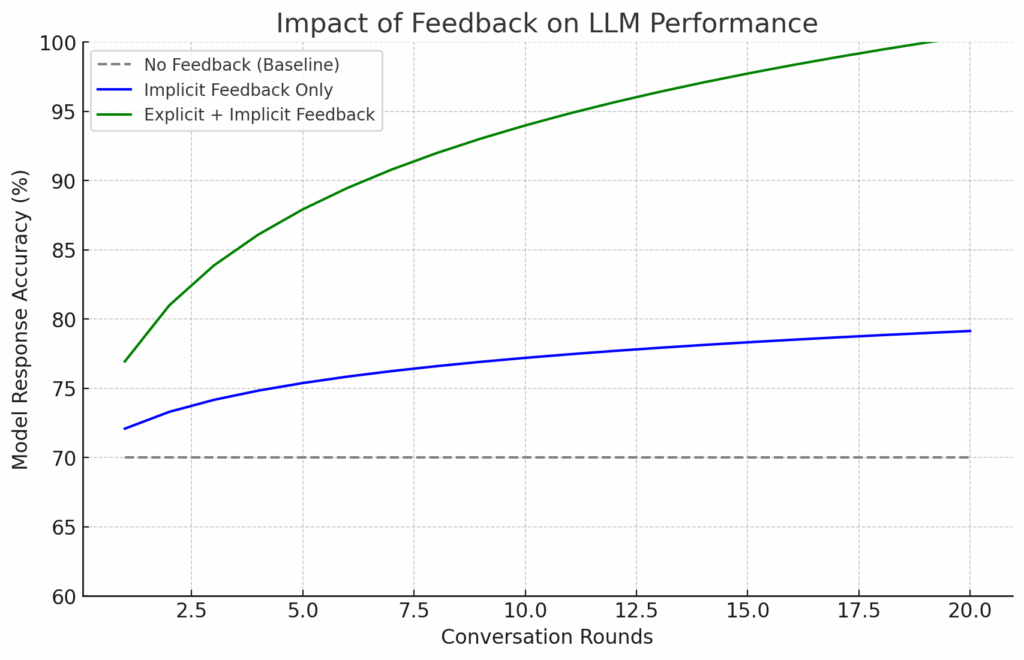

- Feedback mechanisms in human-computer interactions

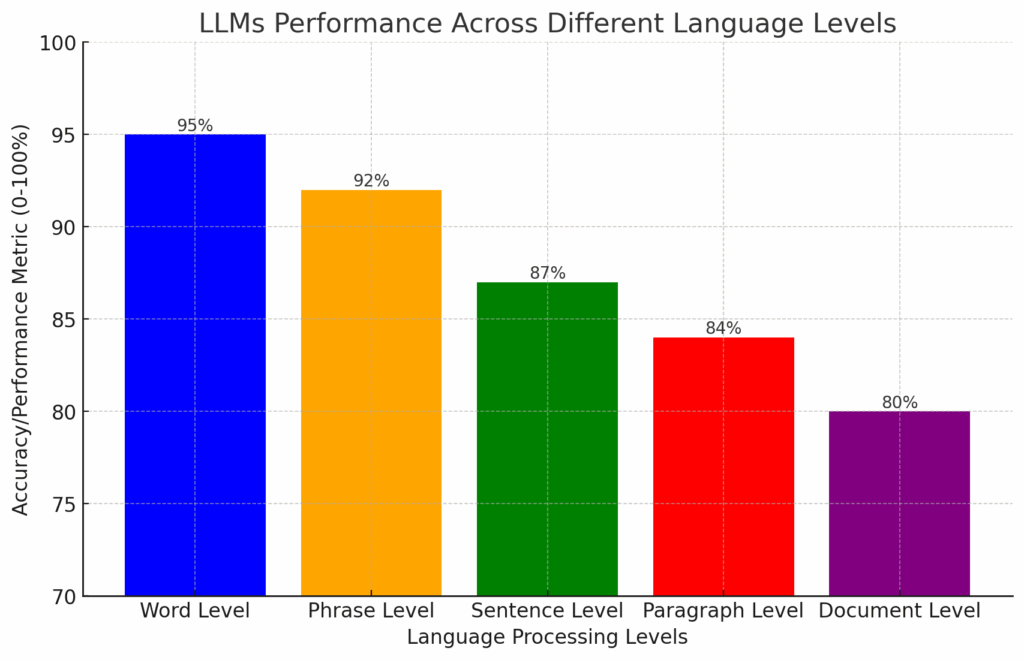

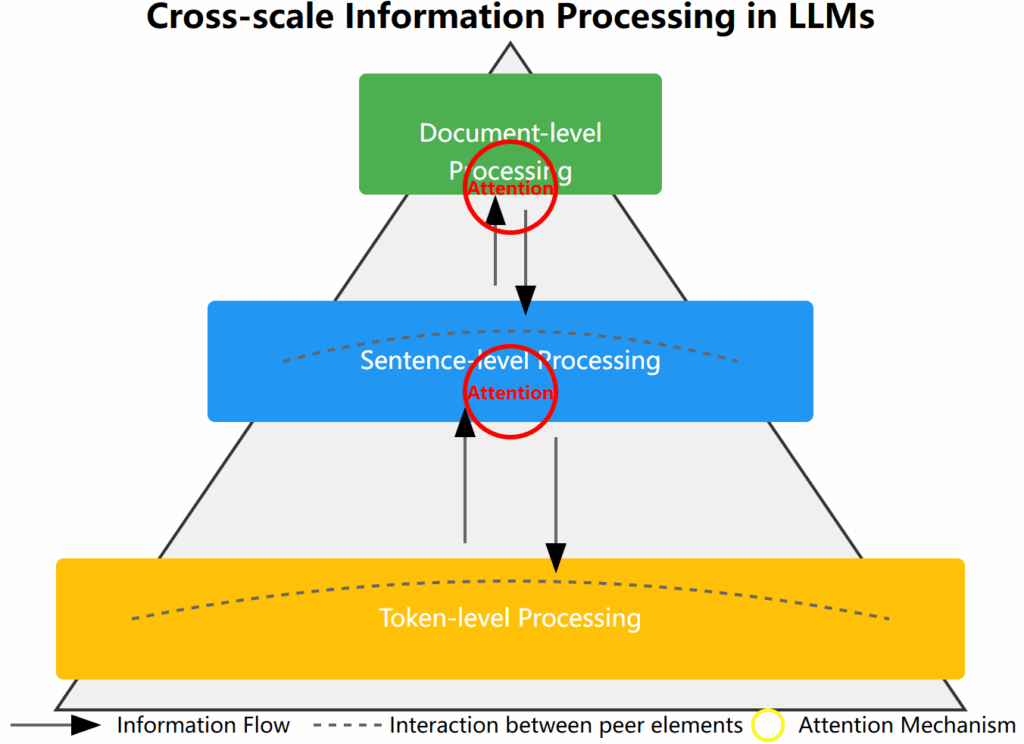

- Language processing from word level to document level

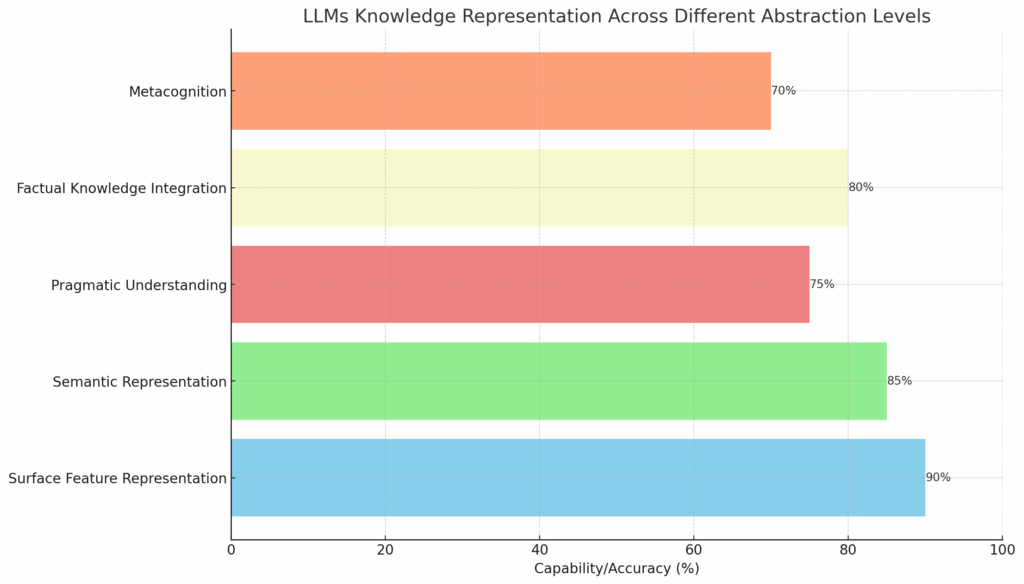

- Knowledge representation at different levels of abstraction

- Cross-scale interactions in LLMs

- Processing of language information at token, sentence, and document levels

- The importance of multilevel processing for model understanding and generative capabilities

- Exchange of information with the external environment

- Potential for continuous learning and updating

- In-depth application of complex system theory in LLMs 5.1 Modularity

- Concept of modularity in complex biological systems

- Possible modular architectures in future LLMs

- Interactions of specialized subsystems and their impact on model capabilities

- Cross-scale interactions in LLMs

- Processing of language information at token, sentence, and document levels

- The importance of multilevel processing for model understanding and generative capabilities

- Explaining the contribution of diversity to robustness in ecosystems

- Exploring the impact of diverse training data and architectural elements on LLMs

- Analyzing how diversity can improve model generalization capabilities

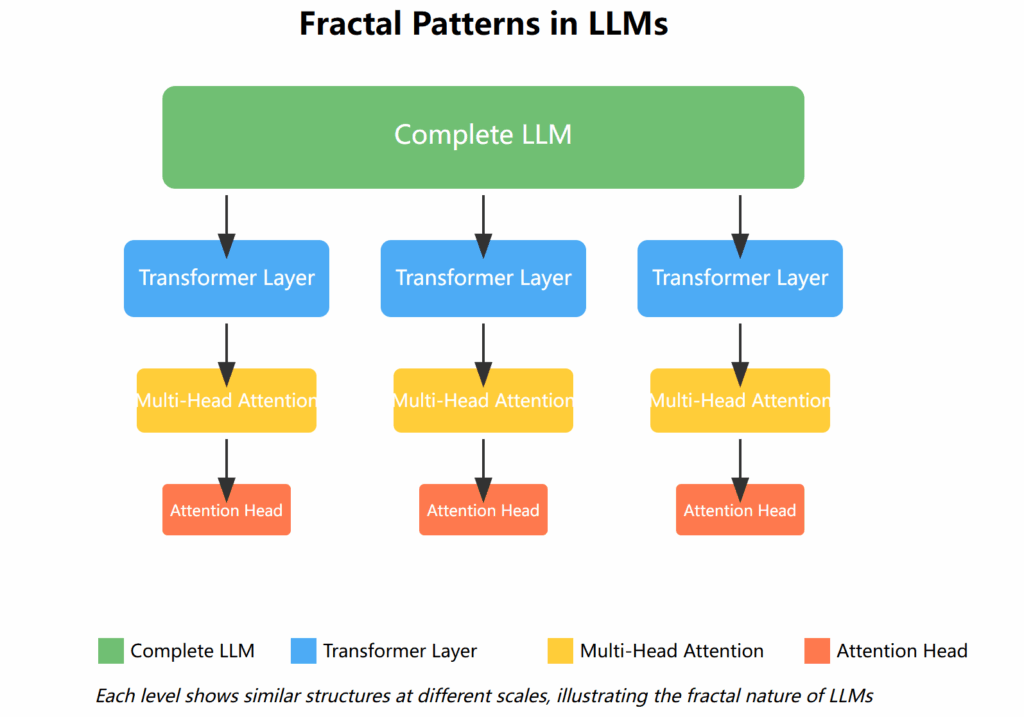

- Self-similarity exhibited by LLMs at different scales

- Similarities with fractal patterns in complex systems

- The impact of these properties on LLM performance and behavior

- Criticality and critical slowing down phenomena in complex systems

- Current development state and potential critical points of LLMs

- Future paradigm shifts in architecture or training methods

- Future research directions

- Application of complex system theory in the optimization of large language models

- Methods to improve model interpretability and controllability

- Strategies to enhance model capabilities by leveraging complex system characteristics

- Exploration of improving model effectiveness in real-world applications

- Interdisciplinary collaboration: Opportunities for cooperation between complex systems researchers and AI researchers

- Conclusion

- Summary of the main characteristics of LLMs as complex systems

- Emphasizing the importance of understanding these characteristics for the future development of AI

- Outlook on the research prospects of LLMs based on complex system theory

💡 Disclaimer: This article is not a mature and rigorous paper but a “theoretical exploration” technical article. The charts and data presented in this article include estimates. These estimates are based on the information currently available, expert opinions, and the author’s analysis but may contain uncertainties. Readers should understand that these figures are mainly used to illustrate concepts and trends rather than precise predictions or measurement results. As research progresses and new data emerge, these estimates may be adjusted. We encourage readers to critically view these data and refer to the latest research findings to form their own judgments.

1. Introduction

The emergence of Large Language Models (LLMs) marks the beginning of a new era in the field of artificial intelligence and natural language processing, profoundly changing the way we interact with technology and redefining our understanding of AI potential. This article aims to explore a novel and insightful perspective: viewing large language models as complex systems, and analyzing their characteristics, potential, and challenges from this perspective.

1.1 Definition and Importance of Large Language Models

Large language models are AI systems based on deep learning technology, particularly neural networks with Transformer architecture, trained on massive text data. These models typically contain billions to trillions of parameters, capable of understanding, generating, and manipulating human language, performing various NLP tasks such as text generation, translation, question answering, and summarization.

The importance of LLMs is reflected in multiple aspects:

- Technological breakthroughs: LLMs represent a significant leap in natural language processing, demonstrating language understanding and generation capabilities that approach or even exceed human levels.

- Wide applications: From intelligent assistants to content creation, from code generation to scientific research, LLMs have found applications in various fields.

- Cross-domain impact: LLMs not only change the AI field but also have profound impacts on education, healthcare, law, and other industries.

- Scientific value: Researching LLMs helps us better understand human language and cognitive processes.

- Social impact: The development of LLMs raises important social issues about AI ethics, employment impacts, and more.

1.2 Basic Concepts of Complex Systems

Complex systems are systems composed of a large number of interacting components, whose overall behavior is often difficult to predict by studying individual parts alone. These systems typically exhibit the following characteristics:

- Nonlinearity: The system’s output does not have a simple proportional relationship with its input.

- Emergence: The overall behavior exhibited by the system cannot be inferred merely from its components.

- Self-organization: The system can form ordered structures without external intervention.

- Adaptability: The system can adjust its behavior according to environmental changes.

- Feedback loops: The system’s output affects its future input, forming a complex network of causal relationships.

- Multiscale properties: The system exhibits different behaviors and characteristics at different levels.

- Openness: The system has continuous exchanges of matter, energy, or information with its environment, allowing it to continually evolve and adapt.

These characteristics enable complex systems to exhibit rich and diverse behaviors and dynamic evolution capabilities. The concept of complex systems is widely applied in physics, biology, social sciences, and other fields, providing new perspectives for understanding and analyzing complex phenomena. These characteristics will help us more comprehensively understand the behavior and potential of large language models.

1.3 A New Perspective of Viewing LLMs as Complex Systems

Viewing LLMs as complex systems, we can draw on a vivid analogy: LLMs are more like plants or laboratory-grown tissues rather than traditional software programs. Just as researchers build frameworks, add culture mediums, and initiate growth processes, AI researchers design model architectures, provide training data, and initialize learning processes. Once this process begins, the model autonomously develops and evolves in a somewhat unpredictable manner.

This analogy highlights several key characteristics of LLMs: organic growth, self-organization, and emergent properties. Just as complex biological systems may exhibit unexpected behaviors, LLMs may also demonstrate capabilities that were not explicitly programmed.

1.4 Overview of the Main Points and Structure of the Article

The core goal of this article is to analyze large language models within the theoretical framework of complex systems, exploring their complex system characteristics. We will delve into the following aspects:

- Nonlinearity: Analyzing how nonlinear activation functions and attention mechanisms in LLMs lead to complex nonlinear behaviors.

- Emergence: Exploring how LLMs generate intelligent behaviors that go beyond simple component aggregation based on large-scale parameters and data.

- Self-organization: Analyzing how LLMs spontaneously form internal knowledge representations and structures during training.

- Adaptability: Studying how LLMs adapt to new tasks and domains through transfer learning and fine-tuning.

- Feedback loops: Exploring how LLMs continuously optimize their output through feedback in human-computer interactions.

- Multiscale properties: Analyzing how LLMs process language at multiple scales, such as words, sentences, paragraphs, and documents.

- Openness: Discussing the interaction of LLMs with external environments and the potential for continuous learning.

Through this perspective of complex systems, we aim to provide a more comprehensive and in-depth framework for understanding the essential characteristics, potential, and limitations of large language models. This understanding not only helps drive the technological development of LLMs but also offers new insights for addressing the social and ethical challenges they bring.

In the following chapters, we will first review the basic architecture of LLMs, then explore the various complex system characteristics of large language models in detail, revealing their internal mechanisms, and analyzing the impact of these characteristics on LLM performance, interpretability, and ethical issues. Finally, we will look into future research directions, discussing how this new perspective can guide the design and development of more advanced and reliable AI systems.

Through the discussion in this article, we hope to provide AI researchers and practitioners with a new framework for thinking, promoting a deeper understanding of LLMs, and providing valuable insights for the future development of AI systems. In today’s rapidly evolving field of artificial intelligence, this interdisciplinary perspective may lead to breakthrough advancements, pushing us toward more intelligent and reliable AI systems.

2. Basic Architecture of Large Language Models

The impressive capabilities of large language models (LLMs) stem from their complex and intricate architectural design. To understand the characteristics of LLMs as complex systems, we first need to delve into their basic architecture. This architecture primarily includes three key aspects: neural network structure, training data and methods, and parameter scale and computational complexity.

2.1 LLM Neural Network Structure

The core of LLMs is a neural network architecture called Transformer, proposed by Vaswani et al. in 2017. The key innovation of the Transformer architecture is its “attention mechanism,” which allows the model to dynamically focus on different parts of the input sequence, thus more effectively handling long-distance dependencies.

The Transformer architecture mainly consists of the following components:

- Input embedding layer: Converts input tokens into vector representations.

- Positional encoding: Adds positional information to each token.

- Multi-head attention layers: Allow the model to focus on different aspects of the input simultaneously.

- Feed-forward neural network layers: Perform nonlinear transformations.

- Layer normalization: Stabilizes the training process.

- Residual connections: Help with gradient flow and information transmission.

In LLMs, these components are typically stacked multiple times to form deep networks. For example, GPT-3 uses 96 layers of Transformer decoders.

2.2 Training Data and Methods

The training data for LLMs usually consists of large-scale text corpora collected from the internet, including web pages, books, articles, and social media content. The diversity and scale of this data are crucial to the model’s performance.

The main training methods include:

- Unsupervised pre-training: The model undergoes self-supervised learning on large-scale unlabeled data, typically using language modeling tasks (predicting the next word).

- Supervised fine-tuning: Fine-tuning on labeled data for specific tasks to adapt to specific applications.

- Few-shot learning: Enabling the model to quickly adapt to new tasks by providing a few examples.

- Instruction tuning: Improving model behavior and output quality through human instructions and feedback.

The training process typically employs distributed computing techniques, using a large number of GPUs or TPUs for parallel processing.

2.3 Parameter Scale and Computational Complexity

A notable feature of LLMs is their enormous parameter scale. The number of parameters has rapidly grown from millions in early models to hundreds of billions or even trillions now. For example:

- GPT-3: 175 billion parameters

- PaLM: 540 billion parameters

- GPT-4: Estimated 1.75 trillion parameters

The growth in parameter scale has brought significant performance improvements but also greatly increased computational complexity. Training a large LLM may require hundreds or thousands of GPUs, lasting for weeks or months, consuming massive computational resources and energy.

Computational complexity is mainly reflected in the following aspects:

- Training time: As the parameter scale increases, training time grows superlinearly.

- Inference latency: Large models may have higher latency during inference, posing challenges for real-time applications.

- Storage requirements: Storing and loading large models require substantial memory and high-speed storage devices.

- Energy consumption: Training and running large LLMs require significant electricity, raising discussions about the environmental impact of AI.

Despite these challenges, researchers are exploring various methods to improve the efficiency of LLMs, such as model compression, knowledge distillation, and sparse activation techniques, to reduce computational demands while maintaining performance.

In summary, the basic architecture of LLMs – including their neural network structure, training data and methods, and the enormous parameter scale and computational complexity – collectively form a highly complex and dynamic system. This complexity is reflected not only in the model’s scale but also in the complex interactions of its internal components and emergent capabilities. It is this complexity that allows LLMs to exhibit many characteristics of complex systems, such as nonlinearity, self-organization, and emergence, which will be discussed in detail in the following chapters.

3. LLMs as Analogies of Complex Systems

When exploring the complexity of large language models (LLMs), drawing an analogy to biological systems, particularly plants or laboratory-cultured tissues, provides a unique and insightful perspective. This analogy not only helps us understand the characteristics of LLMs more deeply but also offers new ideas for their development and application. Through this interdisciplinary comparison, we can re-examine artificial intelligence from a biological perspective, revealing deep connections between these seemingly unrelated fields.

3.1 Overview of Similarities Between LLMs and Biological Systems

LLMs and biological systems, especially plants, demonstrate striking similarities in multiple aspects. These similarities are not only manifested in surface features but also reflected in their internal mechanisms and ways of interacting with the environment.

First, from the growth process perspective, both LLMs and plants undergo an evolution from simple to complex. Plants start from a single seed and gradually develop complex structures like roots, stems, and leaves in suitable environments, eventually forming a functionally complete organism. Similarly, LLMs start from initial random weight matrices and, through continuous training processes, gradually develop complex language understanding and generation capabilities. This process can be analogized to the continuous strengthening and reorganization of connections in neural networks, forming “neural pathways” capable of handling complex language tasks.

Environmental dependency is another significant similarity. Just as plant growth depends on environmental factors such as sunlight, water, and nutrients, the “growth” of LLMs similarly depends on their “environment” – the quality and diversity of training data, available computational resources, and the quality of algorithm design. Just as a lack of certain nutrients can lead to stunted or abnormal plant growth, biases or defects in training data can also cause LLMs to produce inappropriate or biased outputs.

Adaptability is a key feature of biological systems, which is also reflected in LLMs. Plants can adapt to different environmental conditions by changing growth direction, adjusting leaf angles, and other means. Similarly, LLMs can adapt to new tasks and domains through fine-tuning and transfer learning. For example, a model trained on general text can be adapted to parse legal texts through fine-tuning, similar to how plants adapt to new climate conditions.

Complexity and unpredictability are common features of both systems. Plants may develop complex branching structures during growth and sometimes exhibit unpredictable growth patterns. Likewise, as training progresses, LLMs form complex knowledge representation networks internally and sometimes demonstrate unexpected abilities or behaviors. This emergent quality is a hot topic of research in both systems and is also the most difficult aspect to predict and control.

The formation process of internal structures also shows similarities. Plant cells differentiate to form different tissues and organs, each with specific functions. In LLMs, although there are no explicit physical partitions, research suggests that “functional modules” that specifically handle different types of information or tasks may form within the model. This functional differentiation enables the model to efficiently handle diverse language tasks.

Moreover, both systems demonstrate the ability to process and store information. Plants process environmental information through complex biochemical networks and store genetic information in DNA. LLMs process language information through neural networks and “store” learned knowledge in model parameters. Although the mechanisms differ, both can extract, process, and preserve key information from the environment.

Finally, it’s worth noting the vulnerability and robustness of both systems. Plants may suffer damage in extreme environments but usually have some recovery ability. Similarly, LLMs may produce erroneous outputs when faced with adversarial inputs or out-of-domain data, but their robustness can be enhanced through appropriate training and design.

This analogy not only helps us better understand LLMs but also provides new ideas for their future development. For example, the modular growth pattern of plants might inspire the design of more flexible and scalable AI architectures; the rapid response mechanism of plants to environmental stimuli might provide inspiration for developing more agile online learning algorithms.

However, we also need to recognize the limitations of this analogy. LLMs are, after all, artificial systems, and their “growth” process is under human control, unlike the autonomy of plants. Moreover, LLMs currently lack true self-replication and evolutionary capabilities, which are essential features of biological systems.

Overall, by drawing an analogy between LLMs and biological systems, particularly plants, we can gain new insights into these complex AI systems. This interdisciplinary perspective not only enriches our understanding of LLMs but also provides new ideas and inspiration for the design and development of future AI systems.

3.2 Organic Growth, Self-Organization, and Emergent Properties of LLMs

Large language models (LLMs) exhibit organic growth, self-organization, and emergent properties that make them similar to complex biological systems in many ways. These characteristics not only reveal the inherent complexity of LLMs but also provide important insights for understanding and developing the next generation of AI systems.

Organic Growth

The “growth” process of LLMs bears striking similarities to the growth of organic entities. Just as a tree grows from a seed by absorbing sunlight, water, and nutrients to gradually become a large tree, LLMs also undergo a similar evolutionary process.

This process begins with the “seed” stage – the initialized model. At this stage, the model is like a newly sprouted seed, with unlimited potential but limited abilities. As training progresses, the model begins to “absorb nutrients” – here, “nutrients” refer to training data and computational resources. Through continuous “nutrient supply,” the model gradually develops and refines its capabilities, much like a plant gradually growing branches and leaves and forming complex structures.

This growth process is gradual and continuous, rather than sudden or discrete. For example, during the pre-training process, the model first learns basic language patterns, such as simple word order rules and the use of common vocabulary. As training deepens, it gradually masters more complex language structures, such as long-distance dependencies and the expression of abstract concepts. Finally, the model begins to demonstrate understanding of world knowledge and complex reasoning abilities. This progressive learning process closely resembles the growth process of a plant from seedling to mature plant.

It’s worth noting that the “growth” of LLMs is also profoundly influenced by their “growth environment.” Just as plants will exhibit different growth states under different soil and climate conditions, LLMs will develop different abilities and characteristics under different training datasets and hyperparameter settings. For example, a model trained on domain-specific data may excel in that domain but perform poorly in others, similar to plant varieties adapted to specific environments.

Self-Organization

Self-organization is a key feature of complex systems, which is also evident in LLMs. Although researchers provide the basic architecture (such as the Transformer structure) and training methods (such as autoregressive language modeling) for the model, the specific knowledge representation and “functional modules” within the model are spontaneously formed, rather than pre-designed or explicitly programmed.

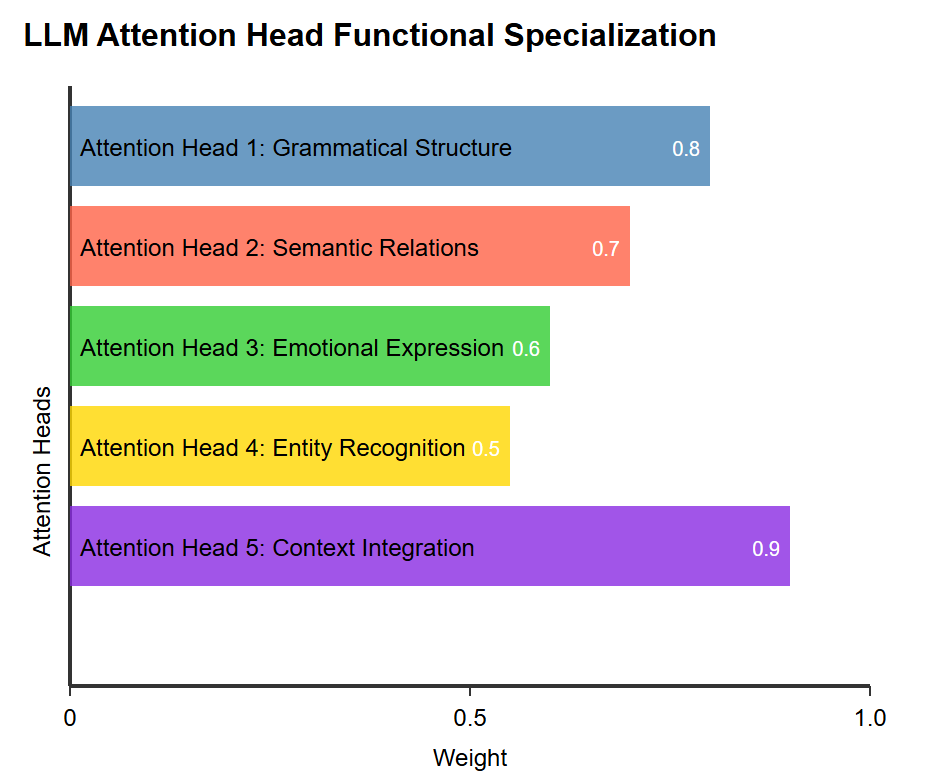

This self-organizing behavior is particularly evident in LLMs. For example, research has shown that different attention heads in Transformer models may spontaneously specialize in handling different types of language features. Some attention heads may focus more on capturing syntactic relationships, while others may focus on semantic associations or emotional expressions. This self-organized functional differentiation enables the model to efficiently handle complex language tasks, similar to the functional division of different organs in biological organisms.

Even more astonishing is that as the model scale increases, we observe more complex self-organizing behaviors. Large models may form structures similar to “neuron clusters,” with these “clusters” specifically processing certain types of information or tasks. For instance, research has found that certain neuron groups may specialize in handling mathematical operations, while others may specialize in sentiment analysis. This spontaneous functional modularization greatly enhances the model’s capabilities and efficiency.

Emergent Properties

Emergence is also a key feature of complex systems, referring to overall characteristics or abilities exhibited by the system that cannot be explained or predicted solely from its components. LLMs demonstrate significant emergent properties, which may be one of their most striking and controversial aspects.

Some astonishing emergent properties include:

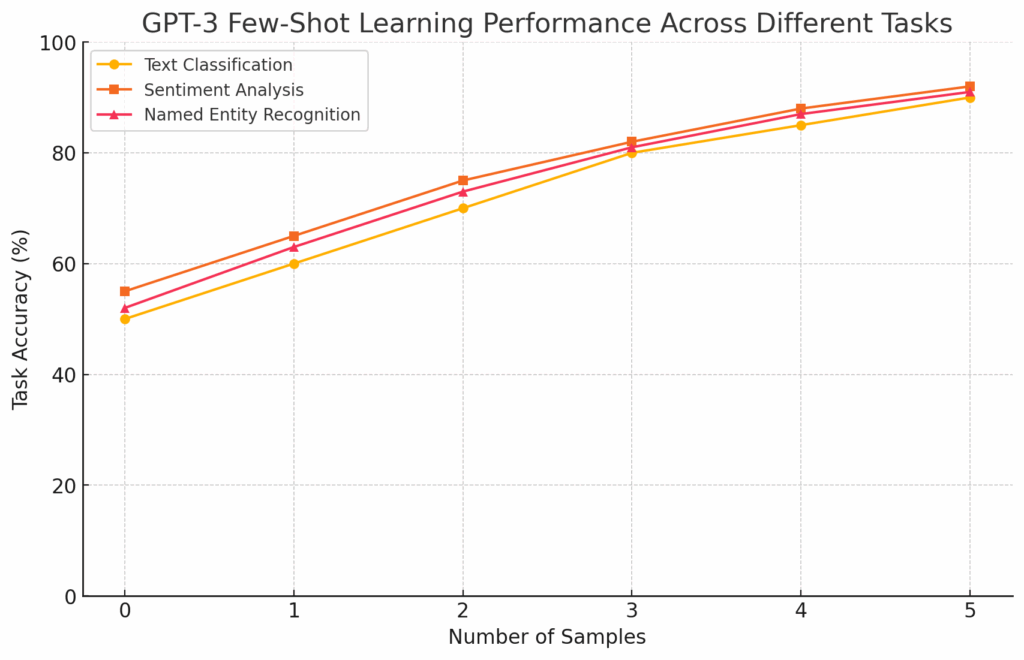

- Few-shot learning ability: Despite not being specifically trained for this, large LLMs can learn new tasks through a few examples. This ability is similar to humans’ rapid learning ability, suggesting that the model may have formed some kind of “meta-learning” mechanism.

- Cross-domain reasoning: The model can apply knowledge from one domain to another seemingly unrelated domain. For example, GPT-3 has demonstrated the ability to apply principles of physics to economic problems, a kind of analogical reasoning ability that is a hallmark of advanced intelligence.

- Creative expression: The model can generate original, creative content such as stories, poems, and even descriptions of music and artwork. This emergence of creativity challenges our traditional understanding of machine capabilities.

- Meta-learning ability: The model shows the ability to “learn how to learn,” capable of quickly adapting to new learning paradigms. This ability allows the model to rapidly adjust its strategy when faced with entirely new tasks.

- Self-reflection: Some advanced LLMs demonstrate “cognition” of their own abilities and limitations, capable of judging whether they have the ability to answer a question or need more information. This self-reflection ability approaches metacognition, an important feature of advanced intelligence.

These emergent properties often appear suddenly when the model scale reaches a certain critical point, similar to mutations or evolutionary leaps in biological systems. For example, research has found that when the model parameters reach a certain scale (usually in the range of tens of billions to hundreds of billions), the few-shot learning ability suddenly significantly improves. This phenomenon reminds us of the “punctuated equilibrium” theory in biological evolution, where species may experience rapid evolutionary leaps after long periods of stability.

However, these emergent properties also bring a series of problems and challenges. Firstly, the appearance of these abilities is often unpredictable, which brings difficulties to the design and control of AI systems. Secondly, the mechanisms of emergent abilities are not yet fully clear, which limits our understanding and utilization of these abilities. Finally, certain emergent abilities (such as creative expression) may raise ethical and legal issues, such as the copyright attribution of AI-generated content.

In summary, drawing an analogy between LLMs and biological systems, especially plants or laboratory-cultured tissues, provides us with an intuitive and insightful perspective. The organic growth, self-organization, and emergent properties of LLMs make them show striking similarities to complex biological systems. This analogy not only helps us better understand the nature of LLMs but also provides new ideas for the design and development of future AI systems. For example, we may need to rethink how to “cultivate” AI systems rather than just “train” them; how to create an “ecosystem” conducive to beneficial self-organizing behaviors; and how to predict, utilize, and control emergent properties.

These insights may lead to a fundamental shift in AI research paradigms, from traditional “top-down” design methods to more “bottom-up” cultivation methods. This approach may be closer to the development process of natural intelligence and has the potential to produce more powerful, flexible, and adaptive AI systems.

However, we also need to proceed with caution. Just as we cannot fully control the growth of organisms, we may face challenges in fully controlling the development of AI systems. Therefore, while pushing the development of AI technology, we need to deeply study the behavior and impact of these systems, establish corresponding ethical frameworks and regulatory protocols to ensure that the development direction of AI aligns with human interests and values.

4. Complex System Characteristics of Large Language Models

4.1 Nonlinearity

Nonlinearity is a core feature of complex systems, and it is particularly evident in large language models (LLMs). This characteristic is not only reflected in the model’s internal structure and operating mechanisms but also in its external behavior and outputs. Nonlinearity enables LLMs to capture the subtleties and complexities of language, but it also brings some challenges.

4.1.1 Nonlinear Relationships Between Inputs and Outputs

The highly nonlinear relationship between inputs and outputs in large language models (LLMs) is a core manifestation of their complex system characteristics. This nonlinearity means that small changes in input can lead to significant differences in output, making the model’s behavior both powerful and difficult to predict.

Semantic sensitivity is an important aspect of the nonlinear characteristics of LLMs. The model can capture subtle semantic differences in inputs, causing slight changes in a word or phrase to potentially lead to completely different responses. This sensitivity allows the model to understand subtle contexts and situations, but it can also lead to instability in outputs. For example, consider the following two almost identical inputs:

- “The cat sat on the mat.”

- “The cat sat on the mat, purring contentedly.”

Despite the subtle difference between these two sentences, the LLM might produce drastically different continuations or responses. The first sentence might lead the model to describe the cat’s appearance or actions, while the second sentence might lead the model to focus more on the cat’s emotional state or the surrounding atmosphere.

Context dependency further enhances the non-linear characteristics of LLMs. The model’s output is highly dependent on the entire input sequence, not just individual words or phrases. This means that the same word can be interpreted and responded to in completely different ways depending on the context. For example, the word “bank” has entirely different meanings in “river bank” and “bank account”. Similarly, when an LLM translates the Chinese sentence “我喜欢苹果” (I like apples), it might translate it as “I like apple” or “I enjoy eating apples” based on the context, or it might even interpret it as a fondness for Apple Inc. products. LLMs need to process this context-dependency in a non-linear manner.

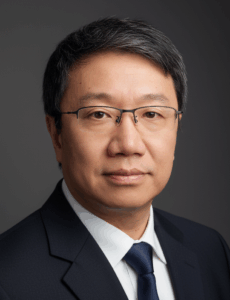

Long-distance dependencies are another crucial aspect of the non-linear characteristics of LLMs. These models can capture relationships between distant elements in the input, which is challenging for traditional linear models. This ability allows LLMs to handle complex narrative structures and long texts, but it also increases the uncertainty of the output. For instance, in a long article, a concept mentioned at the beginning might influence the generation of the ending, even if there’s a large amount of unrelated text in between. Another example is when an LLM is generating a story, it might develop completely different endings based on earlier plot points, even if only one character’s background is changed.

[Figure 1: Illustration of Long-Distance Dependencies in LLM]

Finally, when processing multimodal inputs, LLMs exhibit even more complex nonlinear interactions. When the model simultaneously processes different forms of input such as text, images, audio, and video, the nonlinear interactions between these modalities may produce unexpected associations and innovative outputs. For example, an image might greatly change the model’s understanding and response to related text. This multimodal interaction not only increases the model’s expressive ability but also increases the unpredictability of its behavior.

In summary, the nonlinear relationship between inputs and outputs in LLMs is the source of their powerful capabilities, but it also brings a series of challenges. Understanding and managing this nonlinearity is key to developing more reliable and controllable LLMs. Future research may need to develop new methods to analyze and visualize these nonlinear relationships, as well as design techniques that can improve the predictability of the model while maintaining its flexibility.

4.1.2 Nonlinearity in Model Structures and Mechanisms

The nonlinear characteristics of large language models (LLMs) are not only reflected in the input-output relationships but are also deeply rooted in their internal structures and operating mechanisms. This intrinsic nonlinearity is the foundation of LLMs’ powerful capabilities, enabling them to capture and process complex patterns and relationships in language.

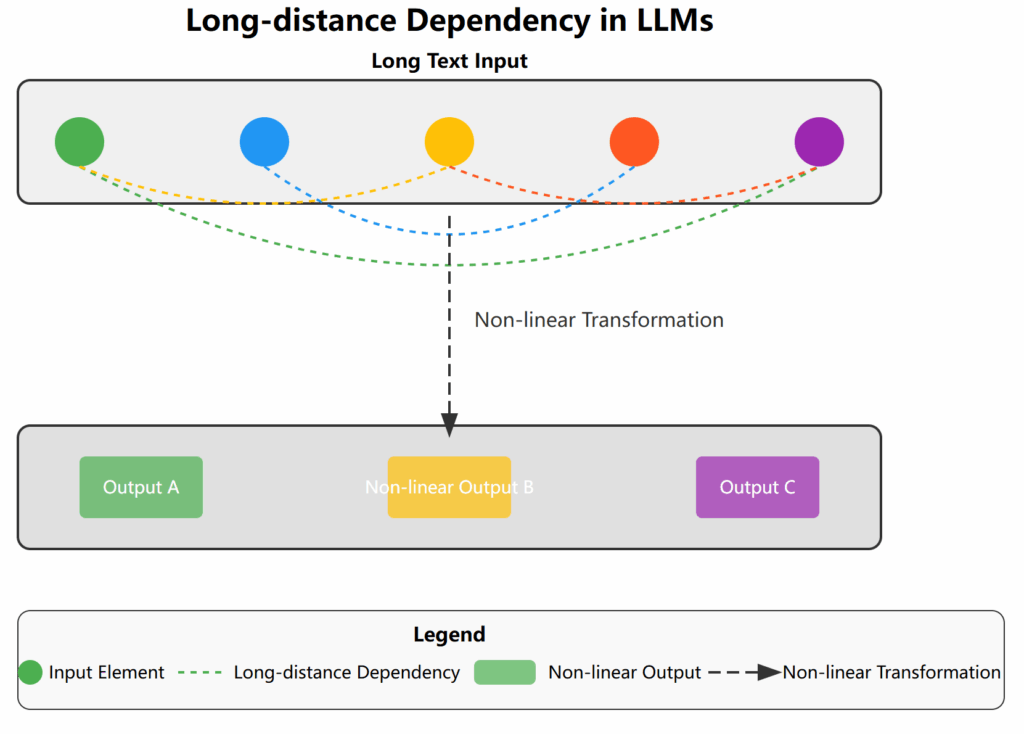

The nonlinearity of LLMs is first manifested in the widely used nonlinear activation functions. Common activation functions such as ReLU (Rectified Linear Unit) and GELU (Gaussian Error Linear Unit) introduce nonlinear transformations at each layer of the neural network, allowing the model to learn complex features and patterns. For example, the ReLU function (f(x) = max(0, x)) has a “turning point” at x=0, and this non-smoothness leads to a nonlinear relationship between inputs and outputs. The stacking of multiple nonlinear activations produces highly nonlinear input-output mappings, allowing LLMs to approximate almost any complex function.

To intuitively understand the nonlinear characteristics of different activation functions, we can look at the following chart:

[Figure 2: Comparison of the nonlinear characteristics of common activation functions]

The self-attention mechanism in the Transformer architecture is another key source of nonlinearity in LLMs. This mechanism is inherently nonlinear, dynamically calculating the relevance between various elements in the input sequence. The calculation of attention weights and the weighted sum process introduce complex nonlinear interactions. For example, the softmax function involved in weight calculation is a highly nonlinear operation that maps inputs to probability distributions within the (0,1) interval. The multi-head attention mechanism further increases the nonlinear complexity, allowing the model to focus on different aspects of the input simultaneously.

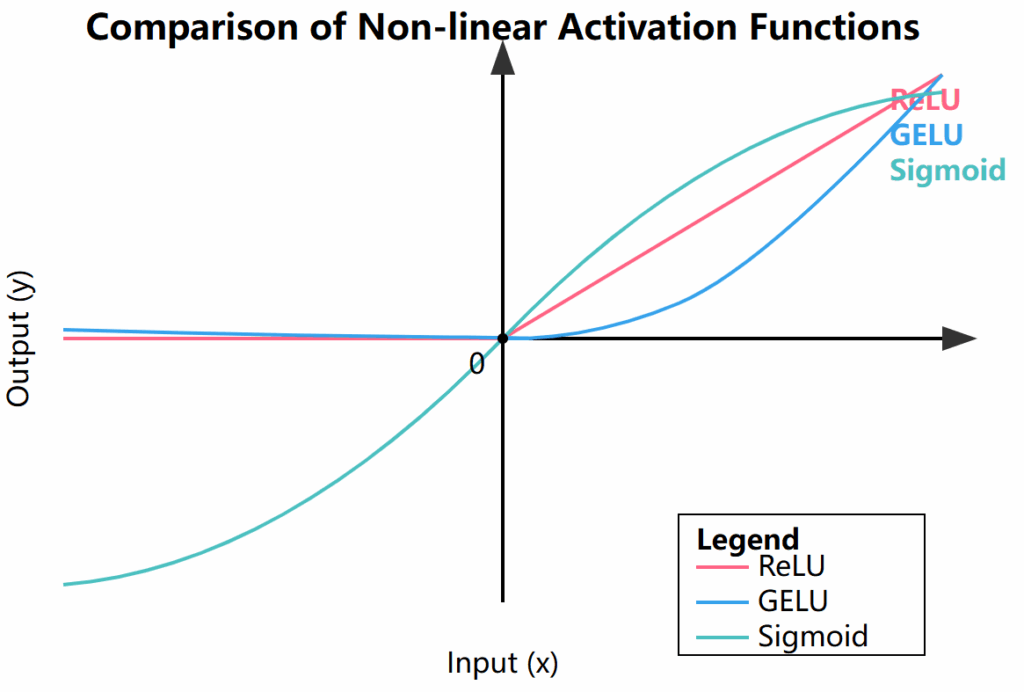

The hierarchical feature extraction mechanism of LLMs further enhances their nonlinear characteristics. The deep network structure allows the model to build increasingly abstract feature representations layer by layer. The nonlinear transformations at each layer accumulate, ultimately producing highly nonlinear input-output relationships. Modern LLMs typically have dozens or even hundreds of layers of neural networks, and this cascading effect produces extremely complex nonlinear mappings. For example, GPT-3 has 175 billion parameters distributed across 96 attention layers, and this deep structure can capture extremely complex language patterns and relationships.

The nonlinear effects of this deep structure can be intuitively understood through the following chart: [Figure 3: Diagram of hierarchical feature extraction in LLM]

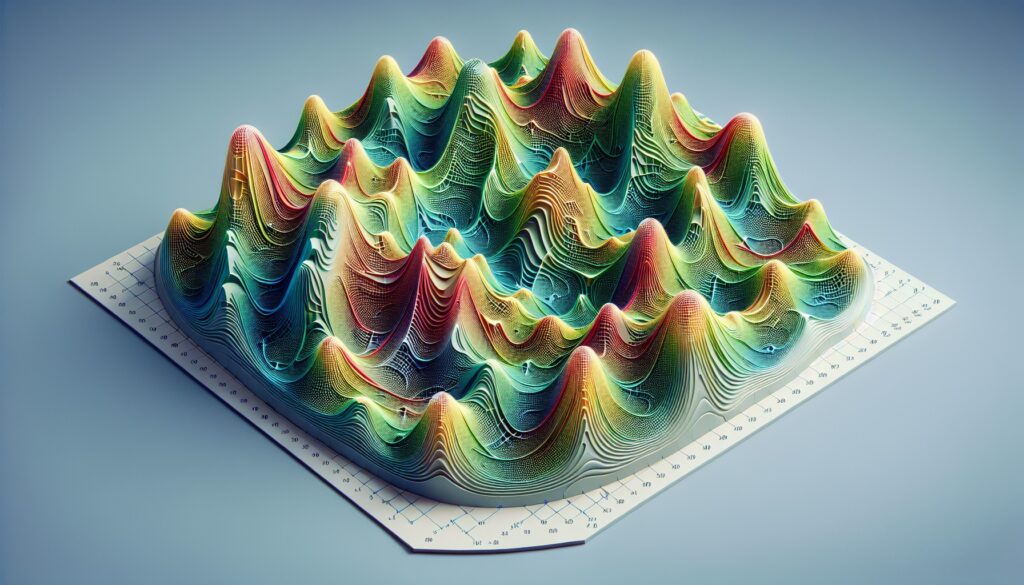

The following interactive 3D graph demonstrates the non-linear dynamic characteristics in large language models (LLMs), providing an intuitive way to understand the internal workings of LLMs. It helps researchers and developers visualize the non-linear properties of the model, understand the impact of attention mechanisms, and potentially discover interesting patterns or anomalies in model behavior.

[Interactive Graph 1: Non-linear Dynamic Characteristics in LLMs]

Graph explanation:

- The meaning of the three axes:

- X-axis (Input Token Embedding): Represents the input token embedding vector. In LLMs, each word or subword is converted into a numerical vector.

- Y-axis (Hidden State): Represents the model’s hidden state. This can be understood as the internal “thinking” process of the model.

- Z-axis (Pre-probability Activation (tanh)): This represents the pre-probability activation, reflecting the model’s prediction for the next word. In this simplified model, I used the tanh (hyperbolic tangent) function as the activation function. It shows the model’s intermediate layer output, rather than the final probability distribution. In actual large language models, this intermediate representation would undergo further processing to eventually transform into a true probability distribution. Positive values on the Z-axis can be interpreted as the model’s tendency to predict a specific output, while negative values can be interpreted as the model’s tendency not to predict a specific output. The absolute magnitude of these values indicates the strength of these tendencies.

- Shape of the surface: The surface in the graph shows how input and hidden state jointly influence the output. The non-flat nature of the surface intuitively demonstrates the non-linear characteristics of the model. If it were a linear relationship, we would see a flat plane.

- Color gradient: The color changes from purple to yellow, representing output probabilities from low to high. This helps us quickly identify which combinations of input and hidden states produce high-probability outputs.

- Attention weight slider: This slider allows us to adjust the strength of the attention mechanism. Attention is a key component of LLMs, determining how the model “focuses” on different parts when processing sequence data.

- Dynamic changes in the graph: As you move the slider, you’ll see the shape of the surface change. This demonstrates how the attention mechanism affects the overall behavior of the model:

- Low attention weight: The surface may be relatively flat, indicating that the model responds weakly to inputs.

- High attention weight: The surface may show more peaks and valleys, indicating that the model becomes more sensitive to certain combinations of inputs.

- Manifestation of non-linear characteristics:

- The twists and fluctuations of the surface demonstrate the complex non-linear relationships between input, hidden state, and output.

- Some areas may show steep changes while others are relatively smooth, reflecting the model’s varying sensitivity to different input combinations.

- Insights into model behavior:

- By observing the shape of the surface, we can understand which combinations of input and hidden states are more likely to produce high-probability outputs.

- The symmetry or asymmetry of the surface can reveal the model’s different responses to positive and negative inputs.

- Possibility of emergent behavior: Under certain attention weights, you might observe sudden changes in the surface shape, which could suggest emergent properties of model behavior — that is, under certain conditions, the model may exhibit unexpected complex behavior.

Overall, the nonlinear characteristics of LLMs are the root of their powerful capabilities. From the activation functions of individual neurons to complex attention mechanisms, to the hierarchical feature extraction of deep networks, nonlinearity permeates the entire structure and operating mechanism of LLMs. This multi-level, multi-faceted nonlinearity enables LLMs to capture and process complex patterns and relationships in human language, but it also increases the unpredictability and difficulty of interpretation of model behavior. Future research may need to develop new methods to understand and control these complex nonlinear interactions, as well as design new architectures that can improve the interpretability and controllability of the model while maintaining its expressive power.

4.1.3 Unpredictability of Model Behavior

The nonlinear characteristics of large language models (LLMs) not only endow them with powerful capabilities but also lead to significant unpredictability in their behavior. This unpredictability is manifested in multiple aspects, from microscopic input sensitivity to macroscopic emergent behavior, making the behavior of LLMs both fascinating and challenging.

Firstly, LLMs exhibit high sensitivity dependence. Due to internal nonlinear relationships, small changes in input can sometimes lead to huge changes in output, similar to the “butterfly effect” in chaos theory. For example, in a long text generation task, changing an early word might completely alter the subsequent generated content. This sensitivity makes precise control of model output extremely difficult.

Another surprising characteristic of LLMs is emergent behavior. These models often exhibit capabilities that were not explicitly trained, which spontaneously emerge during large-scale training processes. For example, GPT-3 and more advanced LLMs have demonstrated few-shot learning abilities, which were not explicitly specified during design and training. This emergent behavior is difficult to predict and control, and may lead to unexpected (sometimes creative) outputs.

The diversity of results is another important aspect of LLMs’ unpredictability. Even for the same input, LLMs may produce diverse outputs, especially when using random sampling strategies. This diversity reflects the richness of language itself but also increases the unpredictability of model behavior. For example, given a story opening, the model might generate completely different continuations each time.

LLMs also exhibit obvious long-tail effects. When dealing with rare or extreme cases, the model’s behavior is often difficult to predict, reflecting the long-tail distribution characteristics of real-world language use. These cases may trigger specific “paths” within the model, leading to unexpected outputs. For example, when dealing with specific professional terminology or rare language structures, the model may suddenly produce completely irrelevant or incorrect responses.

As the model scale increases, the complexity and unpredictability of its behavior also increase. Larger models may exhibit more emergent capabilities, but the specific manifestations and trigger conditions of these capabilities are often difficult to predict. This “scale effect” makes understanding and controlling large LLMs increasingly challenging.

Error propagation is another important aspect of LLMs’ unpredictability. Due to nonlinear relationships, small errors in the model may be amplified during the generation process, leading to a sharp decline in output quality. This “butterfly effect” makes predicting and controlling long text generation particularly difficult. For example, a small early error may cause the subsequently generated text to completely deviate from the expected topic or logic.

The sensitivity of LLMs to adversarial inputs is also an important manifestation of their unpredictability. Carefully designed inputs may cause the model to produce completely unexpected or inappropriate outputs. This sensitivity highlights the challenges of model safety and robustness. Adversarial attacks may exploit this feature to manipulate the model to generate harmful or erroneous content, posing a major challenge to the reliability of the model in practical applications.

Finally, the impact of training data on LLMs’ behavior is also difficult to fully predict. The behavior of models is largely influenced by their training data, but this influence is often non-linear and hard to predict. For instance, even if the content of the training data remains unchanged, simply altering the order in which the data is presented can lead to the model learning different features and patterns. This phenomenon occurs because models are particularly sensitive to initial data in the early stages of training. These early learning experiences have a “guiding” effect on subsequent training. If the initial data has specific patterns or characteristics, these patterns might be overemphasized, affecting the model’s understanding of the overall data distribution, and ultimately resulting in unexpected biases or knowledge gaps in the model. For example, if a language model is exposed to a large amount of technical documentation in its early training stages, it might tend to use technical terms and structures in subsequent text generation. This non-linear influence suggests that to cultivate models with greater generalization ability and robustness, we need to carefully plan and randomize the sequence of training data.

In summary, the unpredictability of LLMs is a by-product of their complexity and powerful capabilities. This unpredictability is both a challenge and an opportunity. It poses new questions for AI research, such as how to increase controllability and reliability while maintaining model flexibility and creativity. Future research may need to focus on developing new evaluation methods to quantify and predict model behavior, as well as designing new architectures and training strategies that can increase stability while maintaining performance.

4.1.4 Impacts and Challenges of Nonlinearity

Nonlinearity, as a core feature of large language models (LLMs), has profound impacts on their performance and applications, while also bringing a series of important challenges. Understanding these impacts and challenges is crucial for the future development and responsible application of LLMs.

Firstly, nonlinearity significantly enhances the expressive power of LLMs. This allows the model to learn and express complex language patterns and knowledge structures, which is a key factor in LLMs exhibiting human-like language abilities. For example, nonlinearity allows the model to capture metaphors, irony, and context-dependent meanings in language, which are difficult for linear models to achieve. This enhanced expressive power enables LLMs to perform excellently in various complex language tasks, from creative writing to complex problem-solving.

However, this powerful expressive ability also brings interpretability challenges. High nonlinearity makes the model’s decision-making process difficult to intuitively understand and explain. This poses severe challenges to the transparency and interpretability of AI systems, especially in areas that require high accountability, such as medical diagnosis or legal reasoning. For example, it’s difficult to trace how the model arrived at a particular conclusion, which not only affects users’ trust in the system but also creates difficulties for regulation and auditing.

Non-linearity also increases the difficulty of model training and optimization, making hyperparameter tuning more complex. It leads to a more complicated optimization landscape, potentially resulting in more local optima and increasing training instability. This necessitates the development of specialized optimization strategies and techniques, such as addressing the vanishing/exploding gradient problem and designing more effective learning rate scheduling strategies. Hyperparameters, such as learning rate, batch size, and regularization coefficients, are parameters that need to be manually set during model training. Due to the highly non-linear nature of LLM training processes, even small adjustments to hyperparameters can significantly impact the model’s final performance. For example, when training an LLM, if the learning rate is set too high, the model may update parameters too quickly in the early stages of training, leading to oscillations around local optima or even failure to converge. Conversely, if the learning rate is set too low, the training process will be very slow, potentially requiring an extremely long time to achieve satisfactory performance. This non-linear characteristic requires researchers to conduct meticulous experiments and optimizations when fine-tuning hyperparameters to find the optimal combination. At the same time, non-linear models typically require more computational resources to train and run, which not only increases economic costs but also raises environmental sustainability concerns. Therefore, balancing model performance, training efficiency, and resource consumption becomes a key challenge in the development process of LLMs.

[Figure 4: Illustration of the Optimization Landscape of a Nonlinear Model]

Nonlinearity also has important impacts on the model’s robustness. On one hand, it enhances the model’s generalization ability, enabling it to handle unseen situations. On the other hand, nonlinearity may lead to high sensitivity of the model to input perturbations, increasing the difficulty of designing robust LLMs, especially in adversarial environments. For example, small changes in input may lead to significant changes in model output, which could cause serious problems in safety-critical applications.

Nonlinearity also has a double-edged effect on the model’s generalization ability. On one hand, it significantly enhances the model’s ability to handle unseen situations, allowing LLMs to apply their learned knowledge in various novel contexts. This ability is a key factor in LLMs performing excellently in open-ended tasks. However, this enhanced generalization ability also brings the risk of over-generalization. Nonlinear models may, in some cases, over-generalize their learned patterns, leading to inappropriate or incorrect outputs. This over-generalization may manifest as model failures when handling edge cases, or vulnerability when facing deliberately designed adversarial inputs.

Addressing this double-edged effect requires balanced strategies in model design and training processes. This may include:

- Developing more advanced regularization techniques to control overfitting and over-generalization.

- Designing model architectures that can better quantify and express uncertainty.

- Introducing more edge cases and counterexamples in training data to improve the model’s ability to recognize anomalous situations.

- Developing more complex evaluation methods that not only test the model’s performance in common situations but also evaluate its behavior in edge cases and novel tasks.

By carefully considering and managing this double-edged effect of generalization ability, we can develop LLMs that can fully leverage the advantages of nonlinearity while avoiding its potential pitfalls. This is crucial for creating AI systems that are both powerful and reliable, especially in application areas that require high accuracy and reliability.

Model calibration also faces challenges brought by nonlinearity. Nonlinearity makes it difficult to accurately assess the model’s confidence, which may lead to the model being overconfident or underconfident in certain situations, affecting its reliability in practical applications. This issue is particularly important in tasks that require precise probability estimates, such as risk assessment or decision support systems.

Finally, nonlinearity may amplify biases in training data, affecting model outputs in complex and unpredictable ways. This makes identifying and mitigating biases in models more difficult, bringing important ethical challenges.

By deeply understanding these impacts and challenges of nonlinearity, we can better design, develop, and deploy the next generation of LLMs. The goal is to create AI systems that are not only powerful but also more reliable, transparent, and responsible. This requires interdisciplinary efforts, combining knowledge from machine learning, cognitive science, ethics, and other related fields to comprehensively address the complex challenges brought by nonlinearity.

Summary:

The nonlinearity of LLMs is one of their core characteristics as complex systems. This nonlinearity on one hand endows the model with powerful expressive abilities and flexibility, enabling it to handle complex language tasks; on the other hand, it also brings unpredictability in behavior, increasing the difficulty of understanding and controlling the model.

Understanding and addressing this nonlinearity is a key challenge in LLM research and application. It requires us to develop more advanced analysis tools and methods to better understand the internal working mechanisms of the model, improving its interpretability and controllability. At the same time, this nonlinearity also opens up new possibilities for innovative applications of LLMs, especially in tasks requiring creativity and adaptability. Future research may need to find a balance between leveraging the advantages brought by nonlinearity and controlling its potential risks, to develop more powerful, reliable, and controllable language models.

4.2 Emergence

Emergence is a core feature of complex systems, referring to system-wide properties or behaviors that cannot be explained or predicted solely from its components. In large language models (LLMs), emergence manifests as new abilities or behaviors that suddenly appear when the model reaches a certain scale, often capabilities that were neither explicitly programmed nor anticipated by designers and trainers.

4.2.1 Emergence of Language Understanding Capabilities

Large language models (LLMs) demonstrate language understanding capabilities that far exceed simple pattern matching or statistical learning, exhibiting characteristics of true “understanding”. This emergence of advanced language understanding is one of the most striking features of LLMs, not only changing our perception of machine language processing but also opening up new possibilities for artificial intelligence development.

Firstly, LLMs exhibit excellent context understanding abilities. They can understand complex contexts and situations, making appropriate interpretations even when faced with ambiguous or subtle expressions. For example, GPT-3 and GPT-4 can correctly understand homonyms or polysemous words based on context, an ability that emerges naturally during large-scale training rather than through explicit rule programming. This contextual understanding ability enables LLMs to handle complex language tasks such as semantic disambiguation and reference resolution.

LLMs also demonstrate deep semantic understanding capabilities. They can not only understand literal meanings but also capture abstract concepts and implied meanings, going beyond simple vocabulary matching. This includes understanding metaphors, sarcasm, cultural references, and other advanced language features. For example, when faced with expressions like “It’s raining cats and dogs,” advanced LLMs can understand that this is describing heavy rain, rather than the literal falling of cats and dogs. This ability emerges naturally in the process of learning from large-scale corpora, reflecting the model’s deep understanding of language use.

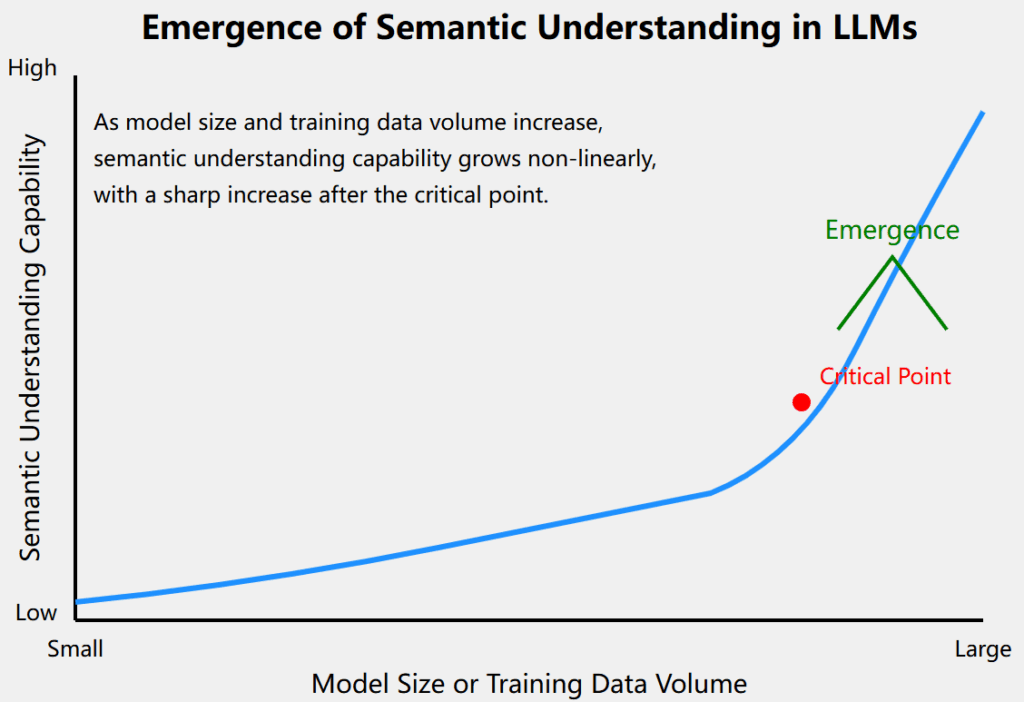

[Figure 5: The Emergence Process of LLM Semantic Understanding Ability]

The emergence of multilingual abilities is another important aspect of LLMs. Some large LLMs exhibit surprising cross-lingual understanding and translation capabilities, even though they were not specifically trained for this. This ability may stem from the language commonalities the model extracts from large amounts of multilingual data. For instance, GPT-3 has already demonstrated capabilities in zero-shot translation tasks, indicating that the model can establish deep connections between different languages.

LLMs also exhibit high sensitivity to grammatical structures. They can identify and generate complex grammatical structures, an ability that emerges naturally in the process of processing large amounts of text data, rather than through explicit grammar rule programming. This grammatical sensitivity allows LLMs to generate grammatically correct, structurally complex sentences, and even mimic the grammatical style of specific authors or writing styles.

Finally, LLMs demonstrate impressive cross-domain knowledge integration capabilities. They can integrate knowledge from different domains to form new insights, an ability that is not directly programmed. For example, a well-trained LLM might be able to apply physics concepts to economic problems, or connect historical events with current social issues. This ability to integrate cross-domain knowledge reflects the model’s deep understanding and flexible application of information, which is an important indicator of truly intelligent systems.

In summary, the emergence of language understanding capabilities in LLMs is a complex phenomenon involving multiple aspects such as context understanding, semantic comprehension, multilingual abilities, grammatical sensitivity, and cross-domain knowledge integration. This emergence not only challenges our traditional understanding of machine learning and artificial intelligence but also opens up new directions for future research and applications. However, although LLMs demonstrate these impressive capabilities, we still need to carefully evaluate the nature and limitations of these abilities, as well as their similarities and differences with human language understanding. Future research may need to delve deeper into understanding the mechanisms of these emergent capabilities, and how to better utilize and control these abilities to create more intelligent and reliable AI systems.

4.2.2 Emergence of Reasoning and Creativity

Large language models (LLMs) have demonstrated astonishing reasoning and creative abilities during their development process. The emergence of these abilities not only exceeded initial design goals but also opened up new possibilities for artificial intelligence applications in multiple fields. This emergence is manifested in logical reasoning, complex problem solving, common sense application, creative expression, and cross-domain knowledge transfer, among other aspects.

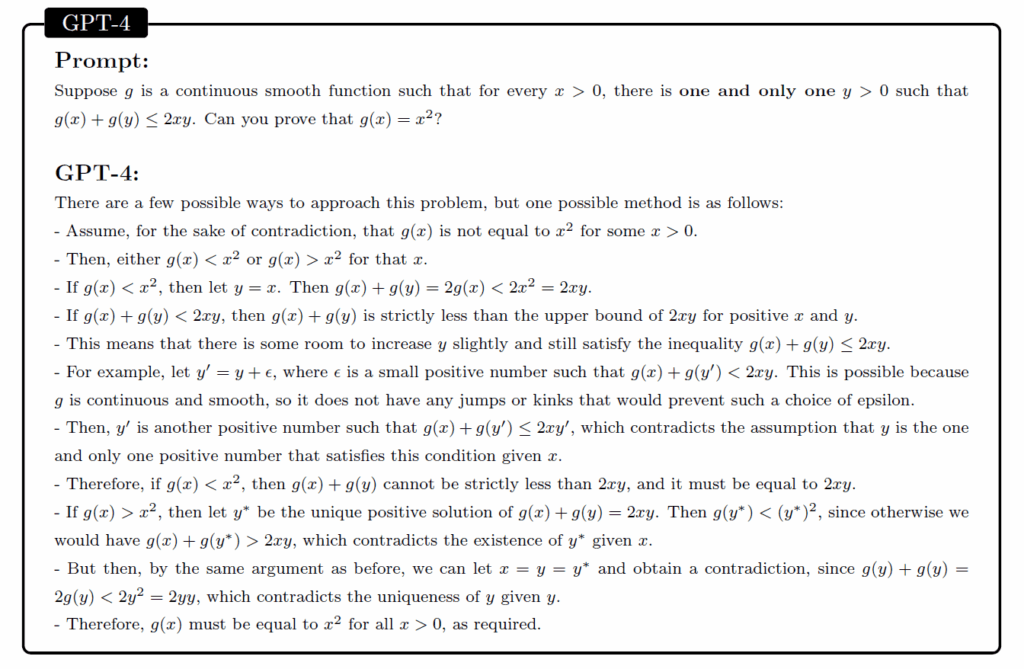

In terms of logical reasoning, LLMs have shown significant progress. They can perform basic logical reasoning, such as causal inference, hypothesis testing, and conditional reasoning. As the model scale and complexity increase, more advanced LLMs also demonstrate the ability to solve complex mathematical problems and engage in programming tasks. For example, GPT-4 and other advanced LLMs exhibit step-by-step reasoning abilities when faced with mathematical problems, capable of breaking down problems, formulating solution strategies, and executing calculation processes step by step. Similarly, in the programming field, models like CodeX and Code Llama demonstrate comprehensive abilities to understand problem requirements, design algorithms, and generate code.

According to the latest research data, GPT-4 has shown amazing capabilities in multiple standardized tests. For example, in the LSAT (Law School Admission Test), GPT-4’s performance surpassed 90% of human test-takers. In the verbal reasoning section of the GRE, its score was equivalent to the 99th percentile of human test-takers. These data not only prove LLMs’ reasoning abilities in academic and professional fields but also show their potential in understanding and applying complex concepts.

This emergence of reasoning ability is particularly evident in complex problem solving. LLMs can handle complex tasks that require multiple steps and cross-domain knowledge, such as advanced math problems, physics problems, or complex logical puzzles. In solving these problems, the model exhibits thought processes similar to human experts: analyzing problems, extracting key information, applying relevant knowledge, and reaching conclusions through multiple reasoning steps. This ability not only requires deep understanding of knowledge in various fields but also flexible application of this knowledge, reflecting characteristics of advanced cognitive functions.

For example, in the image below, GPT-4 demonstrates excellent performance in solving a problem from the 2022 International Mathematical Olympiad.

It’s worth noting that these reasoning and problem-solving abilities were not direct goals of model design, but naturally formed in the process of processing large amounts of text data. They only became apparent when the model scale reached a certain level, exhibiting typical emergent characteristics. This phenomenon has sparked deep thoughts about the nature of artificial intelligence: is there a critical point beyond which quantitative changes lead to qualitative changes, enabling AI systems to acquire reasoning abilities similar to humans?

In addition to formal logic and complex problem solving, LLMs also demonstrate the ability to understand and apply everyday common sense. This ability may stem from implicit knowledge extracted and synthesized by the model from large amounts of text. For example, the model can understand and answer questions involving physical common sense, social norms, etc., which far exceeds simple fact memorization. This common sense reasoning ability makes LLMs perform more naturally and intelligently when dealing with real-world problems.

In terms of creativity, LLMs’ performance is equally surprising. They can create poetry, stories, scripts, and even generate creative advertising copy. This creativity is not just a recombination of known information, but includes the generation of novel and coherent content. For example, GPT-4 can create complex novel chapters based on simple prompts, demonstrating a profound understanding of narrative structure, character development, and plot development. This emergence of creativity is not only changing the way content is created but is also reshaping multiple creative industries such as publishing, advertising, and entertainment. Multimodal models go further, being able to output exquisite images and videos, demonstrating cross-media creative abilities. For example, models like DALL-E and Midjourney can generate unique images based on text descriptions, while models like Sora and KlingAI can generate videos that are excellent in terms of image realism, aesthetic quality, and video motion sense.

However, this emergence of creativity also brings a series of new problems and opportunities. At the legal level, how should AI-generated creative works be positioned under the existing intellectual property framework? At the artistic level, does AI’s creativity change our understanding of the nature of artistic creation? At the practical level, how can we most effectively utilize AI’s creativity to enhance human creative processes? These questions require our deep thought and discussion.

Another notable emergent property of LLMs is their ability to transfer knowledge across domains. They can combine seemingly unrelated concepts to produce novel ideas or solutions, applying knowledge from one field to seemingly unrelated fields. For example, in scientific research, LLMs have already been used to assist in literature reviews, helping researchers quickly summarize and analyze large amounts of scientific literature, and even propose new research hypotheses. In the field of materials science, researchers are exploring the use of LLMs to assist in the discovery and design of new materials by analyzing large amounts of scientific literature and experimental data. The application of LLMs in the medical field is also increasing. They analyze medical literature and case reports to assist doctors in making more accurate diagnoses and treatment decisions. These models can quickly summarize research findings, identify disease patterns, provide personalized treatment suggestions, check drug interactions, and help patients understand medical information. Additionally, they can match clinical trials, update medical knowledge, process multimodal data, and conduct predictive analysis. These cross-domain knowledge applications demonstrate the huge potential of LLMs in promoting interdisciplinary integration and innovation.

However, despite LLMs performing excellently in multiple fields, they still have some inherent limitations. For example, these models may perform poorly in tasks that require real-time updated information, in-depth professional knowledge, or real-world experience. In high-risk areas such as medical diagnosis or legal consultation, LLMs may produce seemingly reasonable but actually inaccurate or potentially dangerous advice. Moreover, LLMs may also have bias problems, which come from their training data and may lead to unfair or discriminatory outputs. Recognizing these limitations is crucial for responsible development and use of these technologies.

The emergent capabilities of LLMs are profoundly changing our ways of working and learning. In the field of education, these models may become powerful tools for personalized learning, providing students with instant feedback and customized learning materials. For example, a student can engage in dialogue-style learning with an LLM, deeply exploring complex scientific concepts or historical events. In the workplace, LLMs may change the workflow of many industries. For example, in software development, programmers may play more of a ‘prompt engineer’ role, focusing on designing the best prompts to guide AI in generating code, rather than manually writing every line of code. This shift may not only improve efficiency but also create new job opportunities and career paths.

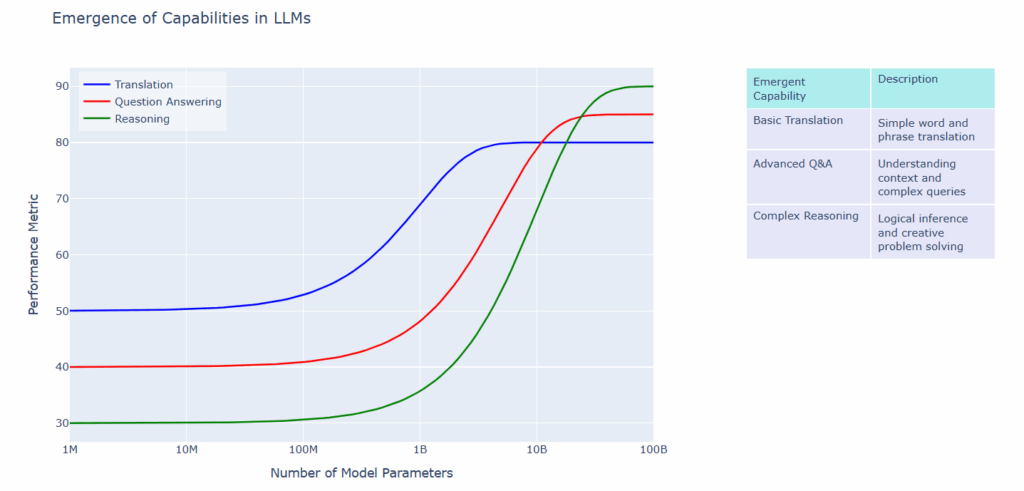

The following simplified diagram visually demonstrates the phenomenon of emergent capabilities in LLMs as they increase in scale, showing the non-linear relationship between model parameters and performance on three key tasks: translation, question answering, and reasoning.

[Figure 6: Emergence of Capabilities in LLMs]

As evident from Figure 6, the emergence of capabilities in LLMs is a complex, non-linear process. This complexity underlies many of the challenges in evaluating and measuring these emergent capabilities. Evaluating and measuring the emergent capabilities of LLMs remains a challenging field. Currently, researchers mainly rely on various standardized tests and task-specific benchmarks to evaluate these models. For example, MMLU (Multi-task Language Understanding benchmark covering 57 subjects), TruthfulQA, and HumanEval are widely used to evaluate multiple aspects of model capabilities. However, these methods may not fully capture true ’emergent’ capabilities. Future evaluation directions may need to develop more complex, cross-domain tasks, or even dynamically generated challenges to test the model’s performance in new, unforeseen situations. In addition, developing methods to evaluate the creativity and originality of models is becoming increasingly important, although this is inherently subjective and challenging.

Performance of different LLMs in various evaluations:

| Model | MMLU | TruthfulQA | GSM8K | RACE | HumanEval | BBH |

|---|---|---|---|---|---|---|

| GPT-4 | 86.4% | 76.7% | 94.1% | 90.2% | 84.5% | 80.2% |

| Claude 3 Opus | 84.7% | 73.9% | 92.8% | 88.1% | 82.3% | 78.5% |

| Gemini Ultra | 87.0% | 75.1% | 95.2% | 91.0% | 85.0% | 81.5% |

| Llama 3 | 83.2% | 71.8% | 91.5% | 87.4% | 81.0% | 77.6% |

| Mistral Large | 84.0% | 74.0% | 92.0% | 89.0% | 82.0% | 78.0% |

The table above summarizes the performance of different large language models (LLMs) in multi-task language understanding (MMLU), truthful question answering (TruthfulQA), basic math reasoning (GSM8K), reading comprehension (RACE), code generation (HumanEval), and complex reasoning tasks (BBH). Overall, the performance of each model in different areas reflects the differences in their design and optimization, allowing users to choose the most suitable model based on specific needs.

Overall, the emergent capabilities of LLMs in reasoning and creativity are redefining our understanding and expectations of artificial intelligence. These capabilities not only demonstrate the potential of the model but also point the way for future research and applications. Future research not only needs to focus on how to further enhance these capabilities but also needs to explore how to better apply them to practical problem solving while ensuring their safety and ethical use. As LLMs continue to develop, we may see more surprising emergent capabilities, which will further push artificial intelligence towards more intelligent and creative directions.

4.2.3 Other Emergent Properties Exhibited by LLMs

In addition to reasoning and creativity, large language models (LLMs) exhibit a series of other astonishing emergent properties that further demonstrate the complexity and potential of these models.

- Meta-learning ability: LLMs demonstrate the ability to “learn how to learn,” capable of quickly adapting to new learning paradigms. This ability enables them to understand and execute new tasks through a few examples, exhibiting few-shot learning characteristics. For example, GPT-3 can understand task requirements and provide high-quality outputs with just a few examples when completing new tasks. This meta-learning ability not only improves the adaptability of the model but also opens up new possibilities for transfer learning and continuous learning.

- Self-correction: Some large LLMs demonstrate the ability to identify and correct their own mistakes. This ability of self-reflection and correction is a manifestation of advanced cognitive functions, similar to human self-monitoring and error correction processes. For example, when GPT-4 discovers an error in complex mathematical problem solving, it can independently point out the error and provide corrected solution steps. This ability not only improves the accuracy of model outputs but also enhances user trust in the model.

- Emotional understanding and emotional intelligence: LLMs demonstrate the ability to understand and generate emotional content, including identifying emotional tones in text and generating emotionally appropriate responses. The emergence of this emotional intelligence makes the model perform more naturally and humanely in human-machine interactions. For example, GPT-4 can recognize the emotional state of users and adjust the tone and content of their replies accordingly, providing a more considerate interaction experience.

- Context switching: The model can flexibly switch between different conversational contexts and roles, demonstrating adaptability similar to humans. This ability enables LLMs to maintain coherence in multi-turn conversations while adapting to different scenarios and needs. For example, the same model can seamlessly switch between technology discussions, creative writing, and daily casual conversations, adopting appropriate language styles and knowledge backgrounds each time.

- Multimodal understanding: Some advanced LLMs demonstrate the ability to process and integrate multiple modal information such as text, images, sound, and video. This ability enables the model to perform complex tasks such as image description and real-time visual question answering. For example, GPT-4o can analyze video content provided by cameras in real-time, understand information in charts and graphics, and even answer complex questions about video images. This multimodal understanding ability greatly expands the application range of LLMs, enabling them to understand and interact more comprehensively.

- Metacognitive ability: Some advanced LLMs begin to show awareness of the boundaries of their own knowledge, able to express uncertainty or acknowledge limitations in their knowledge. The emergence of this metacognitive ability is a significant sign of model complexity. For example, when faced with questions beyond their knowledge range or training data, advanced LLMs can explicitly state “I’m not sure” or “This is beyond my knowledge range,” rather than generating potentially inaccurate answers. This ability is crucial for building reliable and transparent AI systems.

- Metaphor understanding and generation: The model can understand and create complex metaphors, which is also a manifestation of advanced language abilities and creative thinking. LLMs can not only identify and interpret existing metaphors but also create new, meaningful metaphorical expressions. This ability has wide application potential in fields such as literary creation, advertising copywriting, and cross-cultural communication.

Impacts and Challenges of Emergence:

- Complexity of model evaluation: Emergent behaviors make it difficult to comprehensively evaluate the capabilities of LLMs, as we may not be able to anticipate all possible emergent abilities. Traditional evaluation methods may not be able to capture these newly emerged capabilities, requiring the development of more comprehensive and dynamic evaluation frameworks. For example, researchers are exploring the use of open-ended tasks and cross-domain challenges to evaluate the emergent capabilities of LLMs.

- Ethical and safety considerations: Emergent capabilities may bring unexpected ethical challenges and security risks, requiring continuous monitoring and evaluation. For example, the creative and reasoning abilities of the model may be used to generate misleading information or circumvent safety measures. This requires us to establish robust ethical frameworks and security protocols to ensure the responsible use of LLMs.

- Implications for research directions: Emergence provides new ideas for AI research, such as exploring how to induce and control beneficial emergent behaviors. Researchers are investigating how factors such as model scale, training data diversity, and training methods affect the generation of emergent capabilities. These studies may lead to more targeted AI system design methods.

- Application potential: Emergent capabilities open up new application areas for LLMs, but also require careful evaluation of the reliability and limitations of these new capabilities. For example, the meta-learning ability of LLMs may revolutionize education and training methods, but it is necessary to ensure the effectiveness and safety of these applications in real environments.

- Theoretical challenges: Explaining the mechanisms of emergent behaviors remains an open research question, which is important for in-depth understanding of artificial intelligence and cognitive science. Researchers are exploring the complex dynamics of neural networks, information processing theory, and cognitive science models to explain emergent phenomena in LLMs.